Utilising Azure Kubernetes Service for on-premises to Azure migration

Introduction

A large player in the auto insurance domain undertook a massive digital transformation project to migrate its applications and services from on-premises infrastructure into the Microsoft Azure cloud. The on-premises system consisted of a critical external-facing, multi-tenant web application and several internal web applications built using ASP.NET Web Forms. The business layer was developed in C# and exposed to the REST APIs and SOAP services as class libraries and DLLs. To cope with the high data loads, the REST APIs loaded incoming requests into an intricate set of queues, which were then processed by consumer services. In addition, there were integrations with various external third-party systems. The backend consisted of several SQL Server databases.
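
The queue-based pattern described above can be illustrated with a minimal sketch. It is written in Python purely for brevity and is not the original C# implementation. The `accept_request` and `consumer` functions and the `claim_id` field are hypothetical names, and an in-process queue stands in for whatever queueing technology the real system used, which the source does not name.

```python
import queue
import threading

# In-process stand-in for the real queue infrastructure (an assumption).
request_queue = queue.Queue()
processed = []


def accept_request(payload):
    """API side: enqueue the request and return straight away."""
    request_queue.put(payload)


def consumer():
    """Consumer service: drain the queue at its own pace."""
    while True:
        item = request_queue.get()
        if item is None:  # sentinel value tells the consumer to stop
            request_queue.task_done()
            break
        # Hypothetical processing step: record the request identifier.
        processed.append(item["claim_id"])
        request_queue.task_done()


worker = threading.Thread(target=consumer)
worker.start()

# Simulate a burst of incoming API calls.
for n in range(3):
    accept_request({"claim_id": f"C-{n}"})

request_queue.put(None)  # signal shutdown after the burst
request_queue.join()     # wait until every queued item is handled
worker.join()
```

The API returns as soon as the request is queued, so a spike in traffic lengthens the queue rather than overloading the processing code.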

Key Issues

- Services developed over the years wrote to different databases. These databases were kept in sync using a custom synchroniser, and a significant amount of business logic was hidden inside it.

- There was no separation of concerns, and in most cases services accessed the databases directly.

- The on-premises infrastructure was not cost-effective and required significant resources to maintain.

- Although regular backups were taken, no disaster recovery process was in place, leaving the system susceptible to major outages.

Success Criteria

The goal of this multi-year project was to modernise all the services and web applications using the latest technologies and migrate them into the Microsoft Azure cloud, while ensuring there was no disruption to existing services.

Recommendation

Databases

We recommended Azure SQL Managed Instance because it supports the SQL Agent jobs that were required to run on the databases. A database consolidation exercise was undertaken to compare the schemas of the multiple databases, and a plan was created to decommission the synchroniser. One database was identified as “to-keep”, and all services and applications writing to the other databases were evaluated and updated to write to that single database. This effectively halved the complexity of the backend system and made verifying data integrity far more manageable.
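
As an illustration of the schema comparison step, the sketch below reports what a legacy database contains that the “to-keep” database lacks. The table names, column names and the `compare_schemas` helper are hypothetical. In practice the schema dictionaries would be populated from each database's metadata rather than written by hand.

```python
# Hypothetical schemas: table name -> {column name: SQL type}.
keep_db = {
    "Policy": {"PolicyId": "int", "HolderName": "nvarchar(200)"},
    "Claim": {"ClaimId": "int", "PolicyId": "int", "Amount": "decimal(18,2)"},
}
legacy_db = {
    "Policy": {
        "PolicyId": "int",
        "HolderName": "nvarchar(100)",
        "Region": "nvarchar(50)",
    },
    "Quote": {"QuoteId": "int"},
}


def compare_schemas(keep, other):
    """Report what `other` has that `keep` lacks, plus column type mismatches."""
    missing_tables = sorted(set(other) - set(keep))
    missing_columns = {}
    type_mismatches = {}
    for table in sorted(set(other) & set(keep)):
        for column, sql_type in other[table].items():
            if column not in keep[table]:
                missing_columns.setdefault(table, []).append(column)
            elif keep[table][column] != sql_type:
                type_mismatches.setdefault(table, []).append(column)
    return missing_tables, missing_columns, type_mismatches


missing_tables, missing_columns, type_mismatches = compare_schemas(keep_db, legacy_db)
print("Tables to migrate:", missing_tables)
print("Columns to add:", missing_columns)
print("Types to reconcile:", type_mismatches)
```

A report of this kind gives a concrete work list: which tables and columns must move to the surviving database before the services that write to them can be repointed.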

The environment had a similar issue with data warehouses. Several had been created at different times to cater for different business requirements. As a result, redundant information was held in multiple places, which caused discrepancies in reporting. Our team of data warehouse developers studied the schemas of three such data warehouses, identified the common functionality and consolidated them into a single data warehouse, which then acted as the single source of truth.

Backend Business Logic

All the business logic spread across various applications was consolidated into a single “Business Manager” repository. This repository was further segregated into areas such as admin, core and reporting. A separate data layer repository was created to consolidate data access to the single transactional database and data warehouse identified above. Both the data access and business logic libraries were published as .NET Standard NuGet packages, which could be consumed by all the client APIs.
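
The layering described above can be sketched as follows. This is a language-neutral illustration in Python rather than the project's actual .NET Standard libraries. The `Policy`, `PolicyRepository` and `PolicyManager` names and the "active policy" rule are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass
class Policy:
    policy_id: int
    holder: str
    active: bool


class PolicyRepository(Protocol):
    """Data layer contract: the only code that talks to the database."""

    def get(self, policy_id: int) -> Optional[Policy]: ...


class InMemoryPolicyRepository:
    """Stand-in for the real data access package (an assumption for the demo)."""

    def __init__(self, rows):
        self._rows = {p.policy_id: p for p in rows}

    def get(self, policy_id: int) -> Optional[Policy]:
        return self._rows.get(policy_id)


class PolicyManager:
    """Business layer: rules live here, not in the API or the data layer."""

    def __init__(self, repo: PolicyRepository):
        self._repo = repo

    def can_lodge_claim(self, policy_id: int) -> bool:
        # Hypothetical rule: only an existing, active policy may lodge a claim.
        policy = self._repo.get(policy_id)
        return policy is not None and policy.active


manager = PolicyManager(
    InMemoryPolicyRepository(
        [Policy(1, "A. Driver", True), Policy(2, "B. Driver", False)]
    )
)
print(manager.can_lodge_claim(1))
print(manager.can_lodge_claim(2))
```

Because the business layer depends only on the repository contract, every API that consumes the package applies the same rule, and the data access implementation can change without touching the callers.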

Web Services

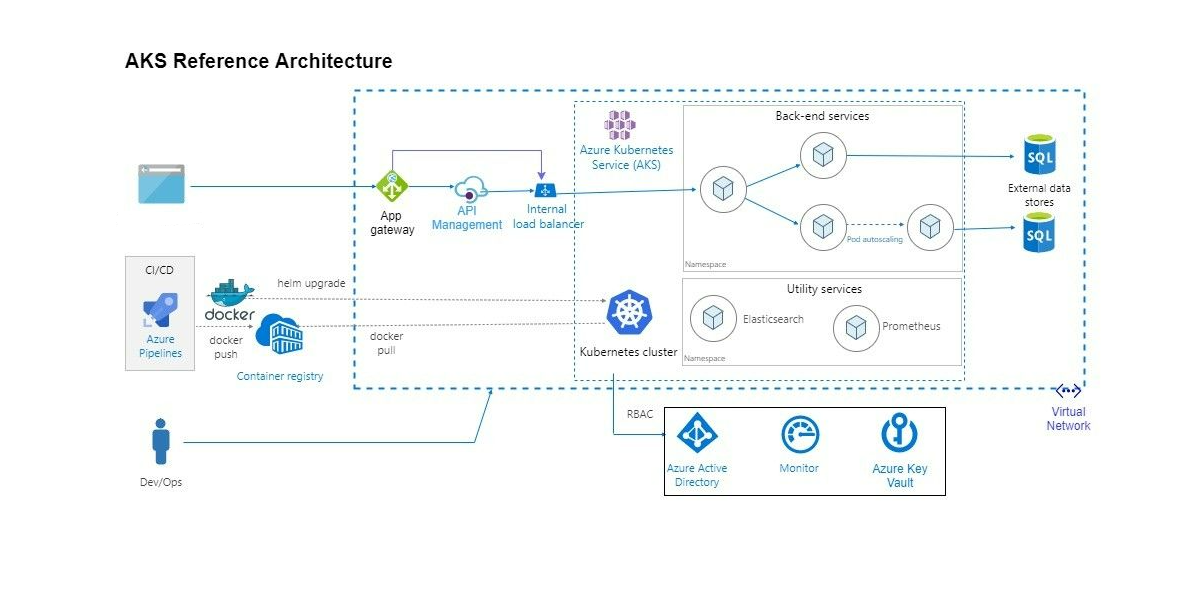

All SOAP web services were rewritten as REST APIs using .NET Core, and the existing REST APIs were updated to use the newly published NuGet packages for business logic. This was the longest exercise, as each service revealed yet another unknown business module that needed to be standardised. All the web services were deployed to Azure Kubernetes Service (AKS).

Web Applications

We recommended that all new web applications be built with ReactJS as the front-end technology and consume the newly created web APIs. A redesign exercise was undertaken to refresh the look and feel of the client-facing application. All functionality from the existing web application was migrated, and a whole new reporting system was implemented using ReactJS. This replaced the antiquated SSRS reports that customers had been using. The new reporting system allowed users to interact with the report data on-screen and provided filtering, grouping and searching functionality, along with the ability to export reports into various formats.

All administrative functions, which had been performed by various internal applications, were consolidated into a single admin UI, also built with ReactJS. The applications were deployed into an Azure Storage account and a custom domain name was applied. This approach effectively decoupled front-end and backend development, allowing both sides of the project to progress in parallel. A detailed explanation of this project is given in Section 4 under the ReactJS application question.

Azure Services

We made extensive use of Azure DevOps, from the Wiki for project documentation to Boards for managing the monthly sprints. CI/CD pipelines were configured for all projects to ensure continuous integration and deployment to selected environments. External client authentication and authorisation were handled by a custom-built identity server, and Azure Key Vault was used to store application secrets. Docker images were built and pushed into Container Registry, from which they were pulled by each release for deployment into the target environments.

Outcome

The project was divided into two stages:

- Stage 1 consisted of modernising the client-facing application and the services and business logic associated with it.

- Stage 2 consisted of modernising the internal applications and migrating the remaining services.

We have successfully delivered Stage 1 and are in the process of finishing Stage 2 for an end-of-year deadline. The service desk team has been trained on Stage 1, and preparation is underway to onboard the external customers onto the new application.